Understanding Z-Image Turbo: Efficient Image Generation Explained

- Z-Image Team

- Technology

- 01 Dec, 2024

Image generation has seen remarkable progress in recent years, with models producing increasingly realistic and detailed outputs. However, this progress has often come at the cost of enormous model sizes and computational requirements. Z-Image Turbo takes a different approach, demonstrating that efficiency and quality can coexist.

The Challenge of Scale

Some of the most capable recent diffusion models for image generation contain tens of billions of parameters. While these large models can produce impressive results, they present several challenges:

- High computational costs for training and inference

- Significant memory requirements that limit accessibility

- Slow generation times that hinder rapid iteration

- Environmental impact from energy consumption

These limitations mean that state-of-the-art image generation has been largely restricted to organizations with substantial computational resources.

A New Approach

Z-Image Turbo addresses these challenges through careful architectural design and training strategies. With just 6 billion parameters, the model achieves results comparable to models ten times its size. This efficiency comes from several key innovations:

Single-Stream Diffusion Transformer

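The architecture uses a single-stream diffusion transformer: text and image tokens are concatenated into one sequence and processed by a shared set of transformer blocks, instead of passing through separate per-modality weights as in multi-stream designs. This sharing reduces parameter redundancy while preserving the model's ability to capture complex interactions between the prompt and the image.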
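
To make the redundancy argument concrete, the sketch below counts the weights in one idealized transformer block under both designs. It is an illustration under simplifying assumptions, not Z-Image Turbo's actual configuration: the hidden size of 1024 and MLP ratio of 4 are hypothetical, and biases, normalization layers, and timestep modulation are ignored.

```python
def block_params(d_model: int, mlp_ratio: int = 4) -> int:
    """Approximate weight count of one transformer block.

    Hypothetical simplification: biases, norms and timestep modulation are ignored.
    """
    attention = 4 * d_model * d_model          # Q, K, V and output projections
    mlp = 2 * mlp_ratio * d_model * d_model    # up- and down-projections of the MLP
    return attention + mlp


def single_stream_params(d_model: int) -> int:
    # Text and image tokens are concatenated into one sequence,
    # so a single set of weights serves both modalities.
    return block_params(d_model)


def dual_stream_params(d_model: int) -> int:
    # Each modality keeps its own projections and MLP; the two streams
    # only interact inside a joint attention operation.
    return 2 * block_params(d_model)


if __name__ == "__main__":
    d = 1024  # hypothetical hidden size, chosen for illustration only
    single = single_stream_params(d)
    dual = dual_stream_params(d)
    print(f"single-stream block: {single:,} weights")
    print(f"dual-stream block:   {dual:,} weights")
    print(f"ratio: {dual / single:.1f}x")
```

At equal hidden size, the simplified single-stream block carries half the weights of its dual-stream counterpart, which frees parameter budget for extra depth or width at the same total size. Real architectures differ in their details, so treat the 2x figure as an idealized ratio for this toy block.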

Optimized Training Process

Rather than simply scaling up model size, the Z-Image team focused on optimizing the training process. This includes:

- Careful dataset curation to maximize learning efficiency

- Advanced training techniques that improve convergence

- Distillation methods that transfer knowledge from a slower teacher model

Reduced Inference Steps

One of the most impressive aspects of Z-Image Turbo is its ability to generate high-quality images in just 8 steps. Traditional diffusion models often require 50 or more steps to achieve comparable quality. Because each step is a full forward pass through the transformer, cutting 50 steps to 8 reduces denoiser computation by more than a factor of six and dramatically shortens generation time without sacrificing output quality.
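
The link between step count and cost can be sketched with a toy Euler sampler for a flow-matching ODE, where every step makes exactly one call to the denoiser. This is a minimal sketch, not Z-Image Turbo's actual sampler or schedule: `toy_velocity` is a hypothetical stand-in for the 6B transformer, and it describes a perfectly straight noise-to-data path, so Euler integration is exact at any step count.

```python
from typing import Callable, Tuple


def euler_sample(velocity: Callable[[float, float], float],
                 x: float, num_steps: int) -> Tuple[float, int]:
    """Integrate dx/dt = velocity(x, t) from t = 1 (noise) to t = 0 (image).

    Returns the final sample and the number of denoiser evaluations.
    """
    dt = 1.0 / num_steps
    t = 1.0
    calls = 0
    for _ in range(num_steps):
        x = x - dt * velocity(x, t)  # one Euler step = one network forward pass
        t -= dt
        calls += 1
    return x, calls


# Hypothetical stand-in for the transformer: on the straight path
# x_t = TARGET + t * (noise - TARGET), the velocity is (x - TARGET) / t.
TARGET = 2.0


def toy_velocity(x: float, t: float) -> float:
    return (x - TARGET) / t


if __name__ == "__main__":
    for steps in (50, 8):
        sample, calls = euler_sample(toy_velocity, x=-1.0, num_steps=steps)
        print(f"{steps:>2} steps -> sample {sample:.6f}, {calls} denoiser calls")
    print(f"denoiser calls saved: {50 / 8:.2f}x")
```

A real model's sampling trajectory is curved, so simply running an undistilled model for 8 steps would usually degrade quality; the distillation methods listed above are what make such a short schedule viable.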

Real-World Impact

The efficiency of Z-Image Turbo has practical implications for a wide range of users:

Accessibility

By fitting within 16 GB of VRAM on consumer-grade hardware, Z-Image Turbo makes advanced image generation accessible to individual creators, small studios, and researchers who lack access to expensive computing infrastructure.
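
A back-of-the-envelope calculation shows why numeric precision matters for a 16 GB budget. The sketch below estimates only the memory needed to hold 6 billion weights at a few common precisions, in decimal gigabytes; it deliberately excludes the text encoder, VAE, and activations, and it makes no claim about which precision Z-Image Turbo actually ships in.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}
VRAM_BUDGET_GB = 16  # consumer GPU budget mentioned in the text


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 6_000_000_000  # 6B-parameter diffusion transformer
    for dtype in ("fp32", "bf16", "fp8"):
        gb = weight_memory_gb(params, dtype)
        verdict = "fits within" if gb < VRAM_BUDGET_GB else "exceeds"
        print(f"{dtype}: {gb:4.1f} GB of weights ({verdict} {VRAM_BUDGET_GB} GB)")
```

Full-precision weights alone (24 GB) would already exceed the budget, while half precision (12 GB) leaves some headroom, which suggests reduced-precision weights are part of what makes consumer hardware practical.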

Speed

Faster generation times enable rapid iteration and experimentation. Artists and designers can explore more ideas in less time, leading to more creative outcomes.

Cost Efficiency

Lower computational requirements translate to reduced costs for both training and inference. This makes it economically viable to use image generation in applications where cost was previously prohibitive.
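
Parameter count and step count compound, which is where most of the inference savings come from. The rough estimate below uses the common rule of thumb that a transformer forward pass costs about 2 FLOPs per parameter per token, and compares the compact model at 8 steps with a hypothetical model ten times larger sampled for 50 steps. The token count per image is likewise an assumption, and it cancels out of the ratio; none of these figures are measured costs, and training cost is not modeled.

```python
def denoiser_flops(num_params: int, tokens: int, steps: int) -> int:
    # Rule of thumb: a transformer forward pass costs about 2 FLOPs per
    # parameter per token (attention's quadratic term is ignored).
    return 2 * num_params * tokens * steps


if __name__ == "__main__":
    tokens = 4096  # hypothetical: latent tokens for one 1024x1024 image
    compact = denoiser_flops(6_000_000_000, tokens, steps=8)
    large = denoiser_flops(60_000_000_000, tokens, steps=50)  # hypothetical 10x model
    print(f"compact model, 8 steps: {compact:.2e} FLOPs")
    print(f"10x model, 50 steps:    {large:.2e} FLOPs")
    print(f"relative cost: {large / compact:.1f}x")
```

Under these assumptions the compact model needs about 62.5x fewer denoiser FLOPs per image. Real costs also depend on the text encoder, VAE decoding, attention overhead, and hardware utilization, so treat this as an order-of-magnitude guide.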

Environmental Considerations

Smaller, more efficient models consume less energy, reducing the environmental impact of AI-powered image generation.

Technical Performance

Despite its compact size, Z-Image Turbo delivers impressive technical performance across multiple dimensions:

Photorealistic Quality

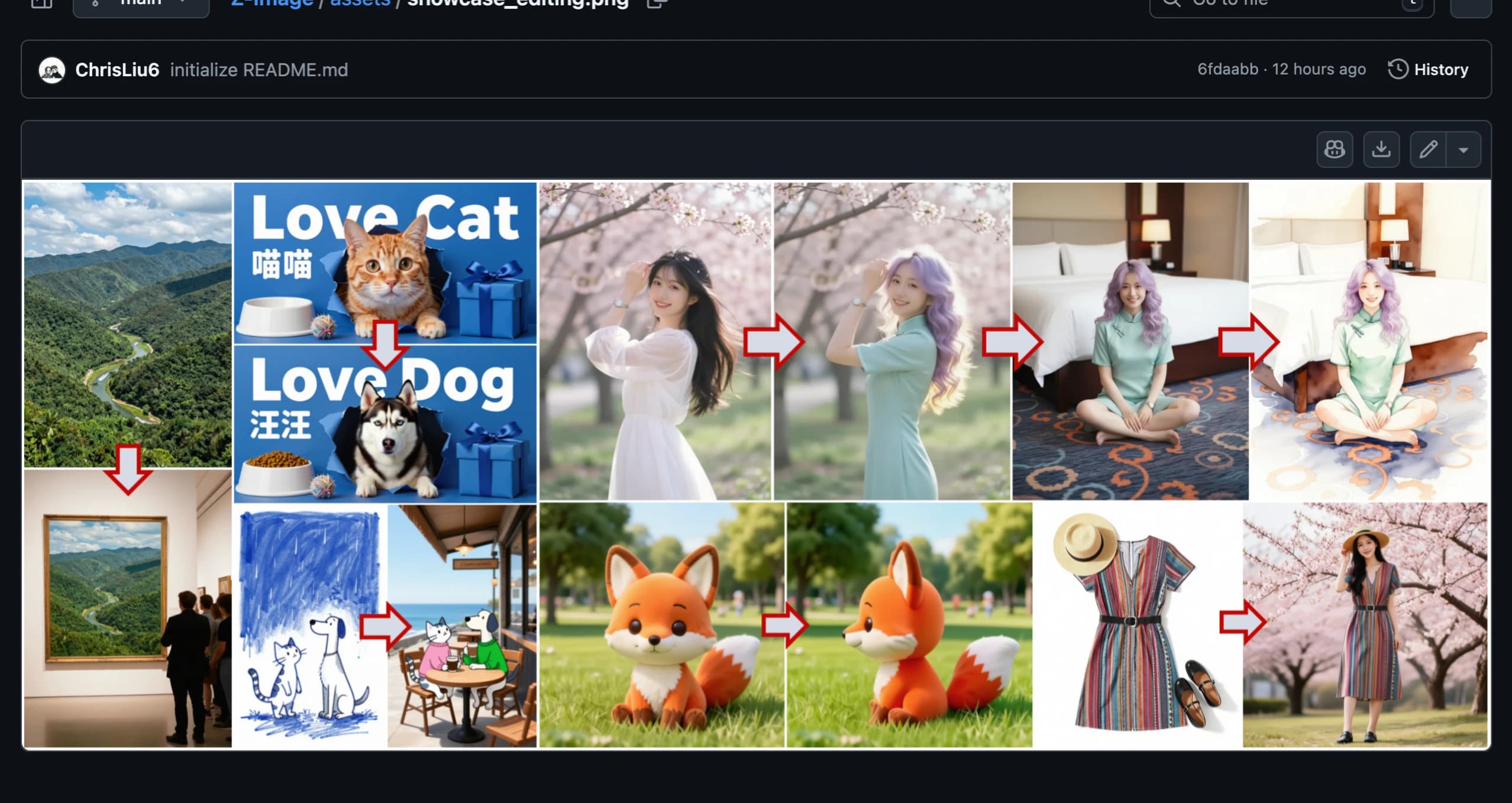

The model produces images with fine details, accurate textures, and realistic lighting. In blind comparisons, outputs from Z-Image Turbo are often indistinguishable from those of much larger models.

Prompt Adherence

The model demonstrates a strong ability to follow complex prompts, accurately capturing multiple objects, specific styles, and detailed scene descriptions.

Bilingual Capabilities

Native support for both English and Chinese makes Z-Image Turbo particularly valuable for international projects. The model can understand prompts in either language and accurately render text in both scripts within generated images.

Practical Applications

The efficiency and quality of Z-Image Turbo enable a wide range of applications:

- Content creation for digital media and marketing

- Concept art and design visualization

- Rapid prototyping of visual ideas

- Educational demonstrations of AI capabilities

- Research in computer vision and generative models

Looking Ahead

Z-Image Turbo demonstrates that the future of image generation doesn't necessarily lie in ever-larger models. Instead, careful optimization and architectural innovation can deliver high-quality results with significantly reduced computational requirements.

This approach opens new possibilities for making advanced AI capabilities more accessible, sustainable, and practical for real-world applications. As the field continues to evolve, we can expect further improvements in efficiency without compromising on quality.

Getting Started

If you're interested in exploring Z-Image Turbo, the model is fully open source with complete documentation available. Whether you're a researcher, developer, or creator, you can start experimenting with the model today and see firsthand how efficient image generation can be.

The combination of quality, speed, and accessibility makes Z-Image Turbo an excellent choice for anyone looking to incorporate image generation into their workflow.